THE INTERNET DEMOCRACY INITIATIVE

Recent Publications

Internet Democracy Initiative affiliates conduct research at the intersection of technology, media, and politics.

March 2025

Invisible Labor: The Backbone of Open Source Software

Robin A. Lange, Anna Gibson, Milo Z. Trujillo, & Brooke Foucault Welles

[Preprint]

Invisible labor is an intrinsic part of the modern workplace, and includes labor that is undervalued or unrecognized, such as creating collaborative atmospheres. Open source software (OSS) is software that is viewable, editable and shareable by anyone with internet access. Contributors are mostly volunteers, who participate for personal edification and because they believe in the spirit of OSS rather than for employment. Volunteerism often leads to high personnel turnover, poor maintenance and inconsistent project management. This, in turn, makes long-term sustainability difficult. We believe that the key to sustainable management is the invisible labor that occurs behind the scenes. It is unclear how OSS contributors think about the invisible labor they perform or how that affects OSS sustainability. We interviewed OSS contributors and asked them about their invisible labor contributions, leadership departure, membership turnover and sustainability. We found that invisible labor is responsible for good leadership, reducing contributor turnover, and creating legitimacy for the project as an organization.

March 2025

The truth sandwich format does not enhance the correction of misinformation

Briony Swire-Thompson, Lucy Butler, David N. Rapp

[Preprint]

The “truth sandwich” correction format, in which false information is bookended by factual information, has frequently been presented as an optimal method for correcting misinformation. Despite recurring recommendations, there is little empirical evidence for enhanced benefits. In two pre-registered experiments (total N = 1046), we evaluated the effectiveness of the truth sandwich correction format against a “bottom-loaded” refutation format, in which the misinformation is first presented prior to factual statements. In Experiment 1, participants first rated belief in cancer misinformation. The misinformation was then corrected using the truth sandwich, corrected using a bottom-loaded refutation, or left uncorrected (control). Participants subsequently rerated their belief in the claims. Experiment 2 replicated and extended Experiment 1 by including a two-week test delay. We found that both correction formats were highly effective. However, there was no evidence that the truth sandwich format enhanced the effectiveness of corrections either immediately after reading or after a two-week delay period, with Bayesian analyses providing consistent evidence for a null effect of correction format. We repeated our analyses isolated to participants who endorsed complementary and alternative medicines, given this subgroup is particularly likely to believe cancer misinformation. We again found no evidence for any superiority of the truth sandwich correction format. These findings suggest that clear and detailed corrections can be powerfully effective against misinformation regardless of format, and advocacy for the truth sandwich correction above other simpler formats is unwarranted.

February 2025

Towards Preventing Intimate Partner Violence by Detecting Disagreements in SMS Communications

Mahesh Babu Kommalapati, Xiao Gu, Harshit Pandey, Christie J. Rizzo, Charlene Collibee, Silvio Amir, Aarti Sathyanarayana

Intimate Partner Violence (IPV) among adolescents is a major public health concern, particularly for justice-involved adolescents, who are at higher risk of experiencing IPV. Early detection of disagreements between romantic partners can provide an opportunity for just-in-time interventions to prevent escalation. Prior work has proposed methods for early detection of disagreements based on metadata features of text message conversations. In this work, we build on these prior efforts and investigate the impact of explicitly modeling the contents of text conversations for disagreement detection. We develop and evaluate supervised classifiers that combine metadata features with sentiment and semantic features of texts and compare their performance against few-shot learning with instruction-tuned Large Language Models (LLMs). We conduct experiments on a dataset collected to study the communication patterns and risk factors associated with IPV among justice-involved adolescents. In addition, we measure models’ generalization to out-of-distribution samples using an external dataset comprising adolescents enrolled in child welfare services. We find that: (i) text-based features improve predictive performance but do not help models generalize to other populations; and (ii) LLMs struggle in this setting but can outperform supervised classifiers in out-of-distribution samples.

January 2025

SoK: “Interoperability vs Security” Arguments: A Technical Framework

Daji Landis, Elettra Bietti, Sunoo Park

Concerns about big tech’s monopoly power have featured prominently in recent media and policy discourse, and regulators across the US, the EU, and beyond have ramped up efforts to promote healthier competition in the market. One of the favored approaches is to require certain kinds of interoperation between platforms, to mitigate the current concentration of power in the biggest companies. Unsurprisingly, interoperability initiatives have generally been met with vocal resistance by big tech companies. Perhaps more surprisingly, a significant part of that pushback has been in the name of security: that is, arguing against interoperation on the basis that it will undermine security.

We conduct a detailed examination of “security vs. interoperability” arguments in the context of recent antitrust proceedings in the US and the EU. First, we propose a taxonomy of such arguments. Second, we provide several detailed case studies, which illustrate our taxonomy’s utility in disentangling where security and interoperability are and are not in tension, where securing interoperable systems presents novel engineering challenges, and where “security arguments” against interoperability are really more about anti-competitive behavior than security. Third, we undertake a comparative analysis that highlights key considerations around the interplay of economic incentives, market power, and security across diverse contexts where security and interoperability may appear to be in tension. We believe systematically distinguishing cases and patterns within our taxonomy and analytical framework can be a valuable analytical tool for experts and non-experts alike in today’s fast-paced regulatory landscape.

January 2025

Mapping the Crowdsourcing Workforce in Latin America and the Caribbean

Gianna Williams, Maya De Los Santos, Alexandra To, Saiph Savage

Research has primarily focused on understanding the perspectives and experiences of US-based crowdworkers, leaving a notable gap regarding those in the Global South. To bridge this gap, we conducted a survey of 100 crowdworkers across 16 Latin American and Caribbean countries to understand the tensions in the crowdworking economy abroad. We found that crowd work serves as a stepping stone to financial and professional independence among those we surveyed, alongside tensions around data extraction among crowdworkers.

January 2025

DomainDemo: a dataset of domain-sharing activities among different demographic groups on Twitter

Kai-Cheng Yang, Pranav Goel, Alexi Quintana-Mathé, Luke Horgan, Stefan D. McCabe, Nir Grinberg, Kenneth Joseph, and David Lazer

Social media play a pivotal role in disseminating web content, particularly during elections, yet our understanding of the association between demographic factors and political discourse online remains limited. Here, we introduce a unique dataset, DomainDemo, linking domains shared on Twitter (X) with the demographic characteristics of associated users, including age, gender, race, political affiliation, and geolocation, from 2011 to 2022. This new resource was derived from a panel of over 1.5 million Twitter users matched against their U.S. voter registration records, facilitating a better understanding of a decade of information flows on one of the most prominent social media platforms and trends in political and public discourse among registered U.S. voters from different sociodemographic groups. By aggregating user demographic information onto the domains, we derive five metrics that provide critical insights into over 129,000 websites. In particular, the localness and partisan audience metrics quantify the domains’ geographical reach and ideological orientation, respectively. These metrics show substantial agreement with existing classifications, suggesting the effectiveness and reliability of DomainDemo’s approach.

December 2024

The Diffusion and Reach of (Mis)Information on Facebook During the U.S. 2020 Election

Sandra González-Bailón, David Lazer, Pablo Barberá, William Godel, Hunt Allcott, Taylor Brown, Adriana Crespo-Tenorio, Deen Freelon, Matthew Gentzkow, Andrew M. Guess, Shanto Iyengar, Young Mie Kim, Neil Malhotra, Devra Moehler, Brendan Nyhan, Jennifer Pan, Carlos Velasco Rivera, Jaime Settle, Emily Thorson, Rebekah Tromble, Arjun Wilkins, Magdalena Wojcieszak, Chad Kiewiet de Jonge, Annie Franco, Winter Mason, Natalie Jomini Stroud, Joshua A. Tucker

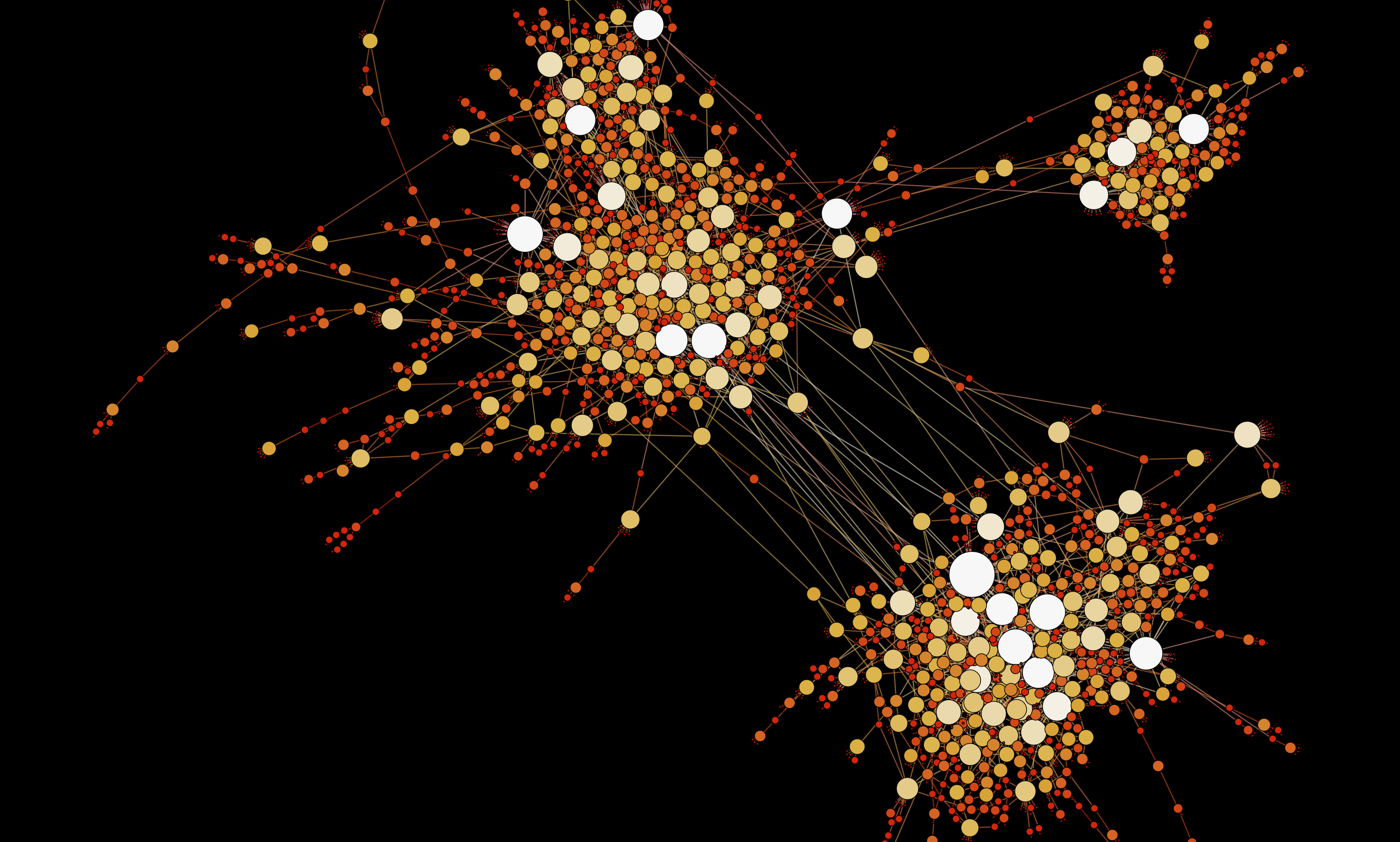

Social media creates the possibility for rapid, viral spread of content, but how many posts actually reach millions? And is misinformation special in how it propagates? We answer these questions by analyzing the virality of and exposure to information on Facebook during the U.S. 2020 presidential election. We examine the diffusion trees of the approximately 1 B posts that were re-shared at least once by U.S.-based adults from July 1, 2020, to February 1, 2021. We differentiate misinformation from non-misinformation posts to show that (1) misinformation diffused more slowly, relying on a small number of active users that spread misinformation via long chains of peer-to-peer diffusion that reached millions; non-misinformation spread primarily through one-to-many affordances (mainly, Pages); (2) the relative importance of peer-to-peer spread for misinformation was likely due to an enforcement gap in content moderation policies designed to target mostly Pages and Groups; and (3) periods of aggressive content moderation proximate to the election coincide with dramatic drops in the spread and reach of misinformation and (to a lesser extent) political content.

December 2024

Multimodal Drivers of Attention Interruption to Baby Product Video Ads

Wen Xie, Lingfei Luan, Yanjun Zhu, Yakov Bart & Sarah Ostadabbas

Ad designers often use sequences of shots in video ads, where frames are similar within a shot but vary across shots. These visual variations, along with changes in auditory and narrative cues, can interrupt viewers’ attention. In this paper, we address the underexplored task of applying multimodal feature extraction techniques to marketing problems. We introduce the “AttInfaForAd” dataset, containing 111 baby product video ads with visual ground truth labels indicating points of interest in the first, middle, and last frames of each shot, identified by 75 shoppers. We propose attention interruption measures and use multimodal techniques to extract visual, auditory, and linguistic features from video ads. Our feature-infused model achieved the lowest mean absolute error and highest R-square among various machine learning algorithms in predicting shopper attention interruption. We highlight the significance of these features in driving attention interruption. By open-sourcing the dataset and model code, we aim to encourage further research in this crucial area.

December 2024

AI Regulation: Competition, Arbitrage & Regulatory Capture

Filippo Lancieri, Laura Edelson & Stefan Bechtold

The commercial launch of ChatGPT in November 2022 and the fast development of Large Language Models catapulted the regulation of Artificial Intelligence to the forefront of policy debates. A vast body of scholarship, white papers, and other policy analyses followed, outlining ideal regulatory regimes for AI. The European Union and other jurisdictions moved forward by regulating AI and LLMs. One overlooked area is the political economy of these regulatory initiatives–or how countries and companies can behave strategically and use different regulatory levers to protect their interests in the international competition on how to regulate AI.

This Article helps fill this gap by shedding light on the tradeoffs involved in the design of AI regulatory regimes in a world where: (i) governments compete with other governments to use AI regulation, privacy, and intellectual property regimes to promote their national interests; and (ii) companies behave strategically in this competition, sometimes trying to capture the regulatory framework. We argue that this multi-level competition to lead AI technology will force governments and companies to trade off risks of regulatory arbitrage versus those of regulatory fragmentation. This may lead to pushes for international harmonization around clubs of countries that share similar interests. Still, international harmonization initiatives will face headwinds given the different interests and the high-stakes decisions at play, thereby pushing towards isolationism. To exemplify these dynamics, we build on historical examples from competition policy, privacy law, intellectual property, and cloud computing.

December 2024

A Case Study in an A.I.-Assisted Content Audit

Rahul Bhargava, Elisabeth Hadjis, Meg Heckman

This paper presents an experimental case study utilizing machine learning and generative AI to audit content diversity in a hyperlocal news outlet, The Scope, based at a university and focused on underrepresented communities in Boston. Through computational text analysis, including entity extraction, topic labeling, and quote extraction and attribution, we evaluate the extent to which The Scope’s coverage aligns with its mission to amplify diverse voices. The results reveal coverage patterns, topical focus, and source demographics, highlighting areas for improvement in editorial practices. This research underscores the potential for AI-driven tools to support similar small newsrooms in enhancing content diversity and alignment with their community-focused missions. Future work envisions developing a cost-effective auditing toolkit to aid hyperlocal publishers in assessing and improving their coverage.

November 2024

Social Media Algorithms Can Shape Affective Polarization via Exposure to Antidemocratic Attitudes and Partisan Animosity

Tiziano Piccardi, Martin Saveski, Chenyan Jia, Jeffrey T. Hancock, Jeanne L. Tsai, Michael Bernstein

There is widespread concern about the negative impacts of social media feed ranking algorithms on political polarization. Leveraging advancements in large language models (LLMs), we develop an approach to re-rank feeds in real-time to test the effects of content that is likely to polarize: expressions of antidemocratic attitudes and partisan animosity (AAPA). In a preregistered 10-day field experiment on X/Twitter with 1,256 consented participants, we increase or decrease participants’ exposure to AAPA in their algorithmically curated feeds. We observe more positive outparty feelings when AAPA exposure is decreased and more negative outparty feelings when AAPA exposure is increased. Exposure to AAPA content also results in an immediate increase in negative emotions, such as sadness and anger. The interventions do not significantly impact traditional engagement metrics such as re-post and favorite rates. These findings highlight a potential pathway for developing feed algorithms that mitigate affective polarization by addressing content that undermines the shared values required for a healthy democracy.

November 2024

When Randomness Beats Redundancy: Insights into the Diffusion of Complex Contagions

Allison Wan, Christoph Riedl, David Lazer

How does social network structure amplify or stifle behavior diffusion? Existing theory suggests that when social reinforcement makes the adoption of behavior more likely, it should spread more, both farther and faster, on clustered networks with redundant ties. Conversely, if adoption does not benefit from social reinforcement, then it should spread more on random networks without such redundancies. We develop a novel model of behavior diffusion with tunable probabilistic adoption and social reinforcement parameters to systematically evaluate the conditions under which clustered networks better spread a behavior compared to random networks. Using both simulations and analytical techniques we find precise boundaries in the parameter space where either network type outperforms the other or performs equally. We find that in most cases, random networks spread a behavior equally as far or farther compared to clustered networks despite strong social reinforcement. While there are regions in which clustered networks better diffuse contagions with social reinforcement, this only holds when the diffusion process approaches that of a deterministic threshold model and does not hold for all socially reinforced behaviors more generally. At best, clustered networks only outperform random networks by at least a five percent margin in 18% of the parameter space, and when social reinforcement is large relative to the baseline probability of adoption.

October 2024

Public Perceptions of ChatGPT: Exploring How Nonexperts Evaluate Its Risks and Benefits

Sangwon Lee, Myojung Chung, Nuri Kim, S. Mo Jones-Jang, Nicole Krämer, and Rhonda McEwen

Despite the media hype and contentious debate surrounding generative artificial intelligence technologies, there is a dearth of research on how these technologies are perceived by the general public. This study aimed to bridge this gap by investigating (a) how people perceive the risks and benefits of ChatGPT and (b) the antecedents of such perceptions. A U.S. national survey (N = 1,004) found that individuals with higher education levels, interest in politics, knowledge about ChatGPT, leftist ideology, a sense of personal relevance, and a skeptical view of science tend to perceive greater risks associated with ChatGPT. These results challenge the conventional “knowledge deficit” model, suggesting that negative perceptions of technology are not merely a result of insufficient knowledge; such perceptions can also stem from a critical mindset that approaches artificial intelligence technology with caution. In contrast, individuals who have previously used ChatGPT, regard it as personally relevant to their lives, and show a keen interest in new media technologies in general tend to recognize its benefits. These patterns suggest that risk perceptions involve more complex cognitive information processing, while benefit perceptions often arise from relatively intuitive decision-making processes. Our findings underscore the vital role of science communication and education in facilitating informed discussions about the risks and benefits of emerging technologies like ChatGPT.

October 2024

IoT Bricks Over v6: Understanding IPv6 Usage in Smart Homes

Tianrui Hu, Daniel J. Dubois, David Choffnes

Recent years have seen growing interest and support for IPv6 in residential networks. While nearly all modern networking devices and operating systems support IPv6, it remains unclear how this basic support translates into higher-layer functionality, privacy, and security in consumer IoT devices. In this paper, we present the first comprehensive study of IPv6 usage in smart homes in a testbed equipped with 93 distinct, popular consumer IoT devices. We investigate whether and how they support and use IPv6, focusing on factors such as IPv6 addressing, configuration, DNS and destinations, and privacy and security practices.

We find that, despite most devices having some degree of IPv6 support, in an IPv6-only network just 20.4% transmit data to Internet IPv6 destinations, and only 8.6% remain functional, indicating that consumer IoT devices are not yet ready for IPv6 networks. Furthermore, 16.1% of devices use easily traceable IPv6 addresses, posing privacy risks. Our findings highlight the inadequate IPv6 support in consumer IoT devices compared to conventional devices such as laptops and mobile phones. This gap is concerning, as it may lead to not only usability issues but also privacy and security risks for smart home users.

October 2024

Human Computation, Equitable, and Innovative Future of Work AI Tools

Kashif Imteyaz, Claudia Flores Saviaga, Saiph Savage

As we enter an era where the synergy between AI technologies and human effort is paramount, the Future of Work is undergoing a radical transformation. Emerging AI tools will profoundly influence how we work, the tools we use, and the very nature of work itself. The “Human Computation, Equitable, and Innovative Future of Work AI Tools” workshop at HCOMP’24 aims to explore groundbreaking solutions for developing fair and inclusive AI tools that shape how we will work. This workshop will delve into the collaborative potential of human computation and artificial intelligence in crafting equitable Future of Work AI tools. Participants will critically examine the current challenges in designing fair and innovative AI systems for the evolving workplace, as well as strategies for effectively integrating human insights into these tools. The primary objective is to foster a rich discourse on scalable, sustainable solutions that promote equitable Future of Work tools for all, with a particular focus on empowering marginalized communities. By bringing together experts from diverse fields, we aim to catalyze ideas that bridge the gap between technological advancement and social equity.

September 2024

Collaboration, crowdsourcing, and misinformation

Chenyan Jia, Angela Yuson Lee, Ryan C Moore, Cid Halsey-Steve Decatur, Sunny Xun Liu, Jeffrey T Hancock

One of humanity’s greatest strengths lies in our ability to collaborate to achieve more than we can alone. Just as collaboration can be an important strength, humankind’s inability to detect deception is one of our greatest weaknesses. Recently, our struggles with deception detection have been the subject of scholarly and public attention with the rise and spread of misinformation online, which threatens public health and civic society. Fortunately, prior work indicates that going beyond the individual can ameliorate weaknesses in deception detection by promoting active discussion or by harnessing the “wisdom of crowds.” Can group collaboration similarly enhance our ability to recognize online misinformation? We conducted a lab experiment where participants assessed the veracity of credible news and misinformation on social media either as an actively collaborating group or while working alone. Our results suggest that collaborative groups were more accurate than individuals at detecting false posts, but not more accurate than a majority-based simulated group, suggesting that “wisdom of crowds” is the more efficient method for identifying misinformation. Our findings reorient research and policy from focusing on the individual to approaches that rely on crowdsourcing or potentially on collaboration in addressing the problem of misinformation.

September 2024

Misinformation and higher-order evidence

Brian Ball, Alexandros Koliousis, Amil Mohanan & Mike Peacey

This paper uses computational methods to simultaneously investigate the epistemological effects of misinformation on communities of rational agents, while also contributing to the philosophical debate on ‘higher-order’ evidence (i.e. evidence that bears on the quality and/or import of one’s evidence). Modelling communities as networks of individuals, each with a degree of belief in a given target proposition, it simulates the introduction of unreliable mis- and disinformants, and records the epistemological consequences for these communities. First, using small, artificial networks, it compares the effects, when agents who are aware of the prevalence of mis- or disinformation in their communities, either deny the import of this higher-order evidence, or attempt to accommodate it by distrusting the information in their environment. Second, deploying simulations on a large(r) real-world network, it observes the impact of increasing levels of misinformation on trusting agents, as well as of more minimal, but structurally targeted, unreliability. Comparing the two information processing strategies in an artificial context, it finds that there is a (familiar) trade-off between accuracy (in arriving at a correct consensus) and efficiency (in doing so in a timely manner). And in a more realistic setting, community confidence in the truth is seen to be depressed in the presence of even minimal levels of misinformation.

September 2024

Discrediting Health Disinformation Sources: Advantages of Highlighting Low Expertise

Briony Swire-Thompson, Kristen Kilgallen, Mitch Dobbs, Jacob Bodenger, John Wihbey, and Skyler Johnson

Disinformation is false information spread intentionally, and it is particularly harmful for public health. We conducted three preregistered experiments (N = 1,568) investigating how to discredit dubious health sources and disinformation attributed to them. Experiments 1 and 2 used cancer information and recruited representative U.S. samples. Participants read a vignette about a seemingly reputable source and rated their credibility. Participants were randomly assigned to a control condition or interventions that (a) corrected the source’s disinformation, (b) highlighted the source’s low expertise, or (c) corrected disinformation and highlighted low expertise (Experiment 2). Next, participants rated their belief in the source’s disinformation claims and rerated their credibility. We found that highlighting low expertise was equivalent to (or more effective than) other interventions for reducing belief in disinformation. Highlighting low expertise was also more effective than correcting disinformation for reducing source credibility, although combining it with correcting disinformation outperformed low expertise alone (Experiment 2). Experiment 3 extended this paradigm to vaccine information in vaccinated and unvaccinated subgroups. A conflict-of-interest intervention and 1 week retention interval were also added. Highlighting low expertise was the most effective intervention in both vaccinated and unvaccinated participants for reducing belief in disinformation and source credibility. It was also the only condition where belief change was sustained over 1 week, but only in the vaccinated subgroup. In sum, highlighting a source’s lack of expertise is a promising option for fact-checkers and health practitioners to reduce belief in disinformation and perceived credibility.

September 2024

Not always as advertised: Different effects from viewing safer gambling (harm prevention) adverts on gambling urges

Philip Newall, Leonardo Weiss-Cohen, Jamie Torrance, Yakov Bart

Public concern around gambling advertising in the UK has been met not by government action but by industry self-regulations, such as a forthcoming voluntary ban on front-of-shirt gambling sponsorship in Premier League soccer. “Safer gambling” (harm prevention) adverts are one recent example, and are TV commercials which inform viewers about gambling-related harm. The present work is the first independent evaluation of safer gambling adverts by both gambling operators and a charity called GambleAware. In an online experiment, we observed the change in participants’ (N = 2,741) Gambling Urge Scale (GUS) scores after viewing either: a conventional financial inducement gambling advert, a gambling operator’s safer gambling advert, an advert from the GambleAware “bet regret” campaign, an advert from the GambleAware “stigma reduction” campaign, or a control advert that was not about gambling. Relative to a neutral control advert, GUS scores increased after viewing a financial inducement or an operator’s safer gambling advert. In comparison to the neutral control condition, GUS score changes were similar after viewing a bet regret advert, but showed a significant decrease after viewing a stigma reduction advert. Those at higher risk of harm reported larger decreases in GUS after watching a bet regret or stigma reduction advert. Overall, this study introduced a novel experimental paradigm for evaluating safer gambling adverts, uncovered a potential downside from gambling operators’ safer gambling adverts, and revealed variation in the potential effectiveness of charity-delivered safer gambling adverts.

August 2024

Representation in Science and Trust in Scientists in the United States

James Druckman, Katherine Ognyanova, Alauna Safarpour, Jonathan Schulman, Kristin Lunz Trujillo, Ata Aydin Uslu, Jon Green, Matthew Baum, Alexi Quintana Mathé, Hong Qu, Roy Perlis, & David Lazer

American scientists are notably unrepresentative of the population. The disproportionately small number of scientists who are women, Black, Hispanic or Latino, from rural areas, religious, and from lower socioeconomic backgrounds has consequences. Specifically, it means that, relative to their counterparts, individuals who identify as such are more dissimilar and more socially distant from scientists. These individuals, in turn, have less trust in scientists, which has palpable implications for health decisions and, potentially, mortality. Increasing the presence of underrepresented groups among scientists can increase trust, highlighting a vital benefit of diversifying science. This means expanding representation across several divides: not just gender and race but also rurality and socioeconomic circumstances.

August 2024

The algorithmic knowledge gap within and between countries: Implications for combatting misinformation

Myojung Chung & John Wihbey

While understanding how social media algorithms operate is essential to protect oneself from misinformation, such understanding is often unevenly distributed. This study explores the algorithmic knowledge gap both within and between countries, using national surveys in the United States (N = 1,415), the United Kingdom (N = 1,435), South Korea (N = 1,798), and Mexico (N = 784). In all countries, algorithmic knowledge varied across sociodemographic factors, albeit in different ways. Levels of algorithmic knowledge also differed between countries: respondents in the United States reported the greatest algorithmic knowledge, followed by respondents in the United Kingdom, Mexico, and South Korea. Additionally, individuals with greater algorithmic knowledge were more inclined to take actions against misinformation.

August 2024

Prevalence and correlates of irritability among U.S. adults

Roy H. Perlis, Ata Uslu, Jonathan Schulman, Aliayah Himelfarb, Faith M. Gunning, Nili Solomonov, Mauricio Santillana, Matthew A. Baum, James N. Druckman, Katherine Ognyanova & David Lazer

This study aimed to characterize the prevalence of irritability among U.S. adults, and the extent to which it co-occurs with major depressive and anxious symptoms. A non-probability internet survey of individuals 18 and older in 50 U.S. states and the District of Columbia was conducted between November 2, 2023, and January 8, 2024. Regression models with survey weighting were used to examine associations between the Brief Irritability Test (BITe5) and sociodemographic and clinical features. The survey cohort included 42,739 individuals, mean age 46.0 (SD 17.0) years; 25,001 (58.5%) identified as women, 17,281 (40.4%) as men, and 457 (1.1%) as nonbinary. A total of 1218 (2.8%) identified as Asian American, 5971 (14.0%) as Black, 5348 (12.5%) as Hispanic, 1775 (4.2%) as another race, and 28,427 (66.5%) as white. Mean irritability score was 13.6 (SD 5.6) on a scale from 5 to 30. In linear regression models, irritability was greater among respondents who were female, younger, had lower levels of education, and lower household income. Greater irritability was associated with likelihood of thoughts of suicide in logistic regression models adjusted for sociodemographic features (OR 1.23, 95% CI 1.22–1.24). Among 1979 individuals without thoughts of suicide on the initial survey assessed for such thoughts on a subsequent survey, greater irritability was also associated with greater likelihood of thoughts of suicide being present (adjusted OR 1.17, 95% CI 1.12–1.23). The prevalence of irritability and its association with thoughts of suicide suggests the need to better understand its implications among adults outside of acute mood episodes.

August 2024

Narrative reversals and story success

Samsun Knight, Matthew D. Rockledge, Yakov Bart

Storytelling is a powerful tool that connects us and shapes our understanding of the world. Theories of effective storytelling boast an intellectual history dating back millennia, highlighting the significance of narratives across civilizations. Yet, despite all this theorizing, empirically predicting what makes a story successful has remained elusive. We propose narrative reversals, key turning points in a story, as pivotal facets that predict story success. Drawing on narrative theory, we conceptualize reversals as plot: essential moments that push narratives forward and shape audience reception. Across 30,000 movies, TV shows, novels, and fundraising pitches, we use computational linguistics and trend detection analysis to develop a quantitative method for measuring narrative reversals via shifts in valence. We find that stories with more, and more dramatic, turning points are more successful. Our findings shed light on this age-old art form and provide a practical approach to understanding and predicting the impact of storytelling. Learn More>>

July 2024

Internal Fractures: The Competing Logics of Social Media Platforms

Angèle Christin, Michael S. Bernstein, Jeffrey T. Hancock, Chenyan Jia, Marijn N. Mado, Jeanne L. Tsai, and Chunchen Xu

Social media platforms are too often understood as monoliths with clear priorities. Instead, we analyze them as complex organizations torn between starkly different justifications of their missions. Focusing on the case of Meta, we inductively analyze the company’s public materials and identify three evaluative logics that shape the platform’s decisions: an engagement logic, a public debate logic, and a wellbeing logic. There are clear trade-offs between these logics, which often result in internal conflicts between teams and departments in charge of these different priorities. We examine recent examples showing how Meta rotates between logics in its decision-making, though the goal of engagement dominates in internal negotiations. We outline how this framework can be applied to other social media platforms such as TikTok, Reddit, and X. We discuss the ramifications of our findings for the study of online harms, exclusion, and extraction. Learn More>>

July 2024

A Culturally-Aware Tool for Crowdworkers: Leveraging Chronemics to Support Diverse Work Styles

Carlos Toxtli, Christopher Curtis, Saiph Savage

Crowdsourcing markets are expanding worldwide, but often feature standardized interfaces that ignore the cultural diversity of their workers, negatively impacting their well-being and productivity. To transform these workplace dynamics, this paper proposes creating culturally-aware workplace tools, specifically designed to adapt to the cultural dimensions of monochronic and polychronic work styles. We illustrate this approach with “CultureFit,” a tool that we engineered based on extensive research in Chronemics and culture theories. To study and evaluate our tool in the real world, we conducted a field experiment with 55 workers from 24 different countries. Our field experiment revealed that CultureFit significantly improved the earnings of workers from cultural backgrounds often overlooked in design. Our study is among the pioneering efforts to examine culturally aware digital labor interventions. It also provides access to a dataset with over two million data points on culture and digital work, which can be leveraged for future research in this emerging field. The paper concludes by discussing the importance and future possibilities of incorporating cultural insights into the design of tools for digital labor. Learn More>>

July 2024

Open (Clinical) LLMs are Sensitive to Instruction Phrasings

Alberto Mario Ceballos Arroyo, Monica Munnangi, Jiuding Sun, Karen Y.C. Zhang, Denis Jered McInerney, Byron C. Wallace, Silvio Amir

Instruction-tuned Large Language Models (LLMs) can perform a wide range of tasks given natural language instructions to do so, but they are sensitive to how such instructions are phrased. This issue is especially concerning in healthcare, as clinicians are unlikely to be experienced prompt engineers and the potential consequences of inaccurate outputs are heightened in this domain.

This raises a practical question: How robust are instruction-tuned LLMs to natural variations in the instructions provided for clinical NLP tasks? We collect prompts from medical doctors across a range of tasks and quantify the sensitivity of seven LLMs — some general, others specialized — to natural (i.e., non-adversarial) instruction phrasings. We find that performance varies substantially across all models, and that — perhaps surprisingly — domain-specific models explicitly trained on clinical data are especially brittle, compared to their general domain counterparts. Further, arbitrary phrasing differences can affect fairness, e.g., valid but distinct instructions for mortality prediction yield a range both in overall performance, and in terms of differences between demographic groups. Learn More >>

July 2024

Measuring Enlarged Mentality: Development and Validation of the Enlarged Mentality Scale

Nuri Kim, Elizabeth Demissie Degefe, S Mo Jones-Jang, Myojung Chung

Hannah Arendt’s concept of enlarged mentality (also referred to as representative thinking) has received much attention from theorists and philosophers, but it has not been a central concept in the empirical political communication literature. This article explicates the concept of enlarged mentality and argues for its relevance to political communication theory and research. Specifically, we developed and validated a 10-item survey instrument to measure orientation towards enlarged thinking among individuals. The Enlarged Mentality Scale was validated in two national samples (Singapore, United Kingdom) and was found to show predictive validity toward deliberative and political engagement variables. We offer suggestions on how the measure of enlarged mentality could be useful for different areas of political communication research. Learn More >>

July 2024

Trust in Physicians and Hospitals During the COVID-19 Pandemic in a 50-State Survey of US Adults

Roy H. Perlis, Katherine Ognyanova, Ata Uslu, Kristin Lunz Trujillo, Mauricio Santillana, James N. Druckman, Matthew A. Baum, David Lazer

Trust in physicians and hospitals has been associated with achieving public health goals, but the increasing politicization of public health policies during the COVID-19 pandemic may have adversely affected such trust.

The combined data included 582 634 responses across 24 survey waves, reflecting 443 455 unique respondents. The unweighted mean (SD) age was 43.3 (16.6) years; 288 186 respondents (65.0%) reported female gender; 21 957 (5.0%) identified as Asian American, 49 428 (11.1%) as Black, 38 423 (8.7%) as Hispanic, 3138 (0.7%) as Native American, 5598 (1.3%) as Pacific Islander, 315 278 (71.1%) as White, and 9633 (2.2%) as other race and ethnicity (those who selected “Other” from a checklist). Overall, the proportion of adults reporting a lot of trust for physicians and hospitals decreased from 71.5% (95% CI, 70.7%-72.2%) in April 2020 to 40.1% (95% CI, 39.4%-40.7%) in January 2024. In regression models, features associated with lower trust as of spring and summer 2023 included being 25 to 64 years of age, female gender, lower educational level, lower income, Black race, and living in a rural setting. These associations persisted even after controlling for partisanship. In turn, greater trust was associated with greater likelihood of vaccination for SARS-CoV-2 (adjusted odds ratio [OR], 4.94; 95% CI, 4.21-5.80) or influenza (adjusted OR, 5.09; 95% CI, 3.93-6.59) and receiving a SARS-CoV-2 booster (adjusted OR, 3.62; 95% CI, 2.99-4.38).

June 2024

Post-January 6th deplatforming reduced the reach of misinformation on Twitter

Stefan D. McCabe, Diogo Ferrari, Jon Green, David Lazer & Kevin M. Esterling

The social media platforms of the twenty-first century have an enormous role in regulating speech in the USA and worldwide. However, there has been little research on platform-wide interventions on speech. Here we evaluate the effect of the decision by Twitter to suddenly deplatform 70,000 misinformation traffickers in response to the violence at the US Capitol on 6 January 2021 (a series of events commonly known as and referred to here as ‘January 6th’). Using a panel of more than 500,000 active Twitter users and natural experimental designs, we evaluate the effects of this intervention on the circulation of misinformation on Twitter. Learn More >>

May 2024

Market or Markets? Investigating Google Search’s Market Shares under Vertical Segmentation

Desheng Hu, Jeffrey Gleason, Muhammad Abu Bakar Aziz, Alice Koeninger, Nikolas Guggenberger, Ronald E. Robertson, & Christo Wilson

Is Google Search a monopoly with gatekeeping power? Regulators from the US, UK, and Europe have argued that it is, based on the assumption that Google Search dominates the market for horizontal (a.k.a. “general”) web search. Google disputes this, claiming that competition extends to all vertical (a.k.a. “specialized”) search engines, and that under this market definition it does not have monopoly power. In this study we present the first analysis of Google Search’s market share under vertical segmentation of online search. We leverage observational trace data collected from a panel of US residents that includes their web browsing history and copies of the Google Search Engine Result Pages they were shown. We observe that participants’ search sessions begin at Google more than 50% of the time in 24 out of 30 vertical market segments (which comprise almost all of our participants’ searches). Our results inform the consequential and ongoing debates about the market power of Google Search and the conceptualization of online markets in general. Learn More >>

April 2024

The Facebook Algorithm's Active Role in Climate Advertisement Delivery

Aruna Sankaranarayanan, Erik Hemberg, Piotr Sapiezynski, and Una-May O’Reilly

Communication strongly influences attitudes toward climate change. In the advertising ecosystem, we can distinguish actors with adversarial stances: organizations with contrarian or advocacy communication goals towards climate action, who direct the advertisement delivery algorithm to launch ads in different destinations by specifying targets and campaign objectives. We present a two-part study: part 1 is an observational study and part 2 is a controlled study. Collectively, they indicate that the advertising delivery algorithm could be actively influencing climate discourse, asserting statistically significant control over advertisement destinations, characterized by U.S. state, gender type, or age range. Further, algorithmic decision-making might be affording a cost advantage to climate contrarians. Learn More >>

April 2024

Journalistic interventions matter: Understanding how Americans perceive fact-checking labels

Chenyan Jia and Taeyoung Lee

While algorithms and crowdsourcing have been increasingly used to debunk or label misinformation on social media, such tasks might be most effective when performed by professional fact checkers or journalists. Drawing on a national survey (N = 1,003), we found that U.S. adults evaluated fact-checking labels created by professional fact checkers as more effective than labels by algorithms and other users. News media labels were perceived as more effective than user labels but not statistically different from labels by fact checkers and algorithms. There was no significant difference between labels created by users and algorithms. These findings have implications for platforms and fact-checking practitioners, underscoring the importance of journalistic professionalism in fact-checking. Learn More >>

April 2024

Cancer: A model topic for misinformation researchers. Current Opinion in Psychology

Briony Swire-Thompson and Skyler Johnson

Although cancer might seem like a niche subject, we argue that it is a model topic for misinformation researchers, and an ideal area of application given its importance for society. We first discuss the prevalence of cancer misinformation online and how it has the potential to cause harm. We next examine the financial incentives for those who profit from disinformation dissemination, how people with cancer are a uniquely vulnerable population, and why trust in science and medical professionals is particularly relevant to this topic. We finally discuss how belief in cancer misinformation has clear objective consequences and can be measured with treatment adherence and health outcomes such as mortality. In sum, cancer misinformation could assist the characterization of misinformation beliefs and be used to develop tools to combat misinformation in general. Learn More >>

March 2024

Who is using ChatGPT and why? Extending the Unified Theory of Acceptance and Use of Technology (UTAUT) model

Sangwon Lee, S. Mo Jones-Jang, Myojung Chung, Nuri Kim, and Jihyang Choi

Since its public launch, ChatGPT has gained the world’s attention, demonstrating the immense potential of artificial intelligence (AI). To explore factors influencing the adoption of ChatGPT, we ran structural equation modelling to test the unified theory of acceptance and use of technology model while incorporating relative risk (vs. benefit) perception and emotional factors into its original form to gain a better understanding of the process. The findings revealed that in addition to individuals’ technology-specific perceptions (i.e., performance expectancy, effort expectancy, social influence, and facilitating conditions), relative risk perception and emotional factors play significant roles in predicting favourable attitude and behaviour intentions towards ChatGPT. Learn More >>

February 2024

"AI and National Security", Handbook of Artificial Intelligence at Work

Saiph Savage, Gabriela Avila, Norma Elva Chávez, and Martha Garcia-Murillo

In this chapter we provide an overview of the impact that AI is having in national security and the new opportunities and challenges that are emerging in the space. For this purpose, we first provide a history of technology for national security. Next, we describe how AI is being used in various national security contexts and the key actors involved in the creation and development of military AI. We go on to discuss how to design AI for the military, especially for national security. We finish by presenting an agenda for AI for national security, highlighting key areas that bear looking into across the globe. Learn More >>

February 2024

Complex network effects on the robustness of graph convolutional networks

Benjamin A. Miller, Kevin Chan & Tina Eliassi-Rad

Vertex classification using graph convolutional networks is susceptible to targeted poisoning attacks, in which both graph structure and node attributes can be changed in an attempt to misclassify a target node. This vulnerability decreases users’ confidence in the learning method and can prevent adoption in high-stakes contexts. Defenses have been proposed, focused on filtering edges before creating the model or aggregating information from neighbors more robustly. This paper considers an alternative: we investigate the ability to exploit network phenomena in the training data selection process to improve classifier robustness. We propose two alternative methods of selecting training data: (1) to select the highest-degree nodes and (2) to select nodes with many connections to the test data. In four real datasets, we show that changing the training set often results in far more perturbations required for a successful attack on the graph structure; often a factor of 2 over the random training baseline. We also run a simulation study in which we demonstrate conditions under which the proposed methods outperform random selection, finding that they improve performance most when homophily is higher, clustering coefficient is higher, node degrees are more homogeneous, and attributes are less informative. In addition, we show that the methods are effective when applied to adaptive attacks, alleviating concerns about generalizability.

February 2024

The Data-Attention Imperative

Elettra Bietti

Today’s digital technologies are transforming the quantification, allocation and monetization of human time and attention. Motivated by a variety of technical and social pressures, the average American spends more than eight hours a day consuming digital media on their computer or phone. Social media overuse has been held responsible for a teenage mental health crisis, a rise in teen suicides and a more general degradation of collective attention processes essential in a political economy and democracy. In the midst of the current attentional crisis, existing bodies of law such as privacy, antitrust and free speech fail to assist us in grappling with concerns about technology overuse, addiction, technology-mediated attention disorders and the pervasive degradation of our individual and collective attention. It is tempting to reduce these disorders to problems of individual choice, delegating solutions to market-based tools or the exercise of individual data protection and speech rights. Instead, the answer requires moving past simplistic views of the market as a self-correcting device guided by individual preferences, and of data within them.

This paper focuses on the role of data in producing a progressive thinning, stretching and erosion of human attention. It argues that understanding the relation between datafication and attention can pave the way toward better law and policy in this area.

January 2024

Social Media's New Referees?: Public Attitudes Toward AI Content Moderation Bots Across Three Countries

John Wihbey and Garrett Morrow

Based on representative national samples of ~1,000 respondents per country, we assess how people in three countries, the United Kingdom, the United States, and Canada, view the use of new artificial intelligence (AI) technologies such as large language models by social media companies for the purposes of content moderation. We find that about half of survey respondents across the three countries indicate that it would be acceptable for company chatbots to start public conversations with users who appear to violate rules or platform community guidelines. Persons who have more regular experiences with consumer-facing chatbots are less likely to be worried in general about the use of these technologies on social media. However, the vast majority of persons (80%+) surveyed across all three countries worry that if companies deploy chatbots supported by generative AI and engage in conversations with users, the chatbots may not understand context, may ruin the social experience of connecting with other humans, and may make flawed decisions. This study raises questions about a potential future where humans and machines interact a great deal more as common actors on the same technical surfaces, such as social media platforms.

January 2024

Computational philosophy: reflections on the PolyGraphs project

Brian Ball, Alexandros Koliousis, Amil Mohanan & Mike Peacey

In this paper, we situate our computational approach to philosophy relative to other digital humanities and computational social science practices, based on reflections stemming from our research on the PolyGraphs project in social epistemology. We begin by describing PolyGraphs. An interdisciplinary project funded by the Academies (BA, RS, and RAEng) and the Leverhulme Trust, it uses philosophical simulations (Mayo-Wilson and Zollman, 2021) to study how ignorance prevails in networks of inquiring rational agents. We deploy models developed in economics (Bala and Goyal, 1998), and refined in philosophy (O’Connor and Weatherall, 2018; Zollman, 2007), to simulate communities of agents engaged in inquiry, who generate evidence relevant to the topic of their investigation and share it with their neighbors, updating their beliefs on the evidence available to them.

January 2024

Data Deserts and Black Boxes: The Impact of Socio-Economic Status on Consumer Profiling

Nico Neumann, Catherine E. Tucker, Levi Kaplan, Alan Mislove, Piotr Sapiezynski

Data brokers use black-box methods to profile and segment individuals for ad targeting, often with mixed success. We present evidence from 5 complementary field tests and 15 data brokers that differences in profiling accuracy and coverage for these attributes mainly depend on who is being profiled. Consumers who are better off—for example, those with higher incomes or living in affluent areas—are both more likely to be profiled and more likely to be profiled accurately. Occupational status (white-collar versus blue-collar jobs), race and ethnicity, gender, and household arrangements often affect the accuracy and likelihood of having profile information available, although this varies by country and whether we consider online or offline coverage of profile attributes. Our analyses suggest that successful consumer-background profiling can be linked to the scope of an individual’s digital footprint from how much time they spend online and the number of digital devices they own. Those who come from lower-income backgrounds have a narrower digital footprint, leading to a “data desert” for such individuals. Vendor characteristics, including differences in profiling methods, explain virtually none of the variation in profiling accuracy for our data, but explain variation in the likelihood of who is profiled. Vendor differences due to unique networks and partnerships also affect profiling outcomes indirectly due to differential access to individuals with different backgrounds. We discuss the implications of our findings for policy and marketing practice.

December 2023

Measuring Targeting Effectiveness in TV Advertising: Evidence from 313 Brands

Samsun Knight, Tsung-Yiou Hsieh, and Yakov Bart

We estimate the heterogeneous effects of TV advertising on revenues of physical retail stores and restaurants in the United States for 313 brands using a novel panel of store-level revenue data and a two-way fixed effects design. We find a mean revenue elasticity to TV advertising of 0.094 and a median elasticity of 0.044, along with a significant estimated S-curvature in the marginal effect of advertising. We document significant heterogeneity in estimated effectiveness across store-level characteristics, and in particular find that advertising is more effective for stores in denser areas and for stores in areas with higher numbers of competitor locations. We then use these heterogeneity estimates to construct optimal allocations of ad expenditure across DMAs and project that these counterfactual reallocations would increase returns on advertising by a median of 4.3% and a mean of 22.1%. This study advances recent research demonstrating that TV advertising is less effective than is generally assumed by highlighting the role of suboptimal geographic targeting and by quantifying how much realized effectiveness can understate advertising’s potential effect.

December 2023

You want a piece of me: Britney Spears as a case study on the prominence of hegemonic tales and subversive stories in online media

Alyssa Hasegawa Smith, Adina Gitomer, and Brooke Foucault Welles

In this work, we seek to understand how hegemonic and subversive (counter-hegemonic) stories about gender and control are constructed across and between media platforms. To do so, we examine how American singer-songwriter Britney Spears is framed in the stories that tabloid journalists, Wikipedia editors, and Twitter users tell about her online. Using Spears’ portrayal as a case study, we hope to better understand how subversive stories come to prominence online, and how platform affordances and incentives can encourage or discourage their emergence. We draw upon previous work on the portrayal of women and mental illness in news and tabloid media, as well as work on narrative formation on Wikipedia. Using computational methods and critical readings of key articles, we find that Twitter, as a source of the #FreeBritney hashtag, continually supports counter-hegemonic narratives during periods of visibility, while both the tabloid publication TMZ and Wikipedia may lag in their adoption of the same.

December 2023

Inequalities in Online Representation: Who Follows Their Own Member of Congress on Twitter?

Stefan McCabe, Jon Green, Pranav Goel, and David Lazer

Members of Congress increasingly rely on social media to communicate with their constituents and other members of the public in real time. However, despite their increased use, little is known about the composition of members’ audiences in these online spaces. We address these questions using a panel of Twitter users linked to their congressional district of residence through administrative data. We provide evidence that Twitter users who followed their own representative in the 115th, 116th, and 117th Congresses were generally older and more partisan, and live in wealthier areas of those districts, compared to those who did not. We further find that shared partisanship and shared membership in historically marginalized groups are associated with an increased probability of a constituent following their congressional representative. These results suggest that the efficiency of communication offered by social media reproduces, rather than alters, patterns of political polarization and class inequalities in representation observed offline.

December 2023

Promises and Perils of Automated Journalism: Algorithms, Experimentation, and “Teachers of Machines” in China and the United States

Chenyan Jia, Martin J. Riedl, and Samuel Woo

Automated tools for parsing and communicating information have increasingly become associated with the production of journalistic content. To study this phenomenon and to explore the development of automated journalism across two locales at the cutting edge of technology, we leverage insights from in-depth interviews with news technologists from pioneering news organizations and Internet companies specialized in the construction of “news bot” technology in the United States and China, including The Associated Press, The New York Times, The Atlanta Journal-Constitution, BuzzFeed, Quartz, Xinhua Zhiyun, Southern Metropolis Daily, Toutiao, and Tencent. Based on these interviews, we document how the creation of automated journalism products is heavily dependent on the successful assembly of actor networks inside and outside organizations. While metrics for measuring the success of automated journalism are applied differently, they often center around the augmentation of existing reportorial activities and focus on replacing rote and mundane (human) work processes. Some of the biggest challenges in automated journalism lie in curating high-quality datasets and managing the associated high stakes of errors in a business defined by trust. Lastly, automated journalism can be seen as a form of experimentation, helping its protagonists to future-proof their respective organizations.

December 2023

Using sequences of life-events to predict human lives

Germans Savcisens, Tina Eliassi-Rad, Lars Kai Hansen, Laust Hvas Mortensen, Lau Lilleholt, Anna Rogers, Ingo Zettler & Sune Lehmann

Here we represent human lives in a way that shares structural similarity to language, and we exploit this similarity to adapt natural language processing techniques to examine the evolution and predictability of human lives based on detailed event sequences. We do this by drawing on a comprehensive registry dataset, which is available for Denmark across several years, and that includes information about life-events related to health, education, occupation, income, address and working hours, recorded with day-to-day resolution. We create embeddings of life-events in a single vector space, showing that this embedding space is robust and highly structured. Our models allow us to predict diverse outcomes ranging from early mortality to personality nuances, outperforming state-of-the-art models by a wide margin. Using methods for interpreting deep learning models, we probe the algorithm to understand the factors that enable our predictions. Our framework allows researchers to discover potential mechanisms that impact life outcomes as well as the associated possibilities for personalized interventions.

December 2023

Democrats are better than Republicans at discerning true and false news but do not have better metacognitive awareness

Mitch Dobbs, Joseph DeGutis, Jorge Morales, Kenneth Joseph, and Briony Swire-Thompson

Insight into one’s own cognitive abilities is one important aspect of metacognition. Whether this insight varies between groups when discerning true and false information has yet to be examined. We investigated whether demographics like political partisanship and age were associated with discernment ability, metacognitive efficiency, and response bias for true and false news. Participants rated the veracity of true and false news headlines and provided confidence ratings for each judgment. We found that Democrats and older adults were better at discerning true and false news than Republicans and younger adults. However, all demographic groups maintained good insight into their discernment ability. Although Republicans were less accurate than Democrats, they slightly outperformed Democrats in metacognitive efficiency when a politically equated item set was used. These results suggest that even when individuals mistake misinformation to be true, they are aware that they might be wrong.

December 2023

Data Representation as Epistemological Resistance

Rahul Bhargava

Over the last two decades quantitative data representation has moved from a specialization of the sciences, economics, and statistics, to becoming commonplace in settings of democratic governance and community decision making. The dominant norms of those fields of origin are not connected to the governance and activism settings data is now used in, where practices emphasize empowerment, efficacy, and engagement. This has created ongoing harms and exclusion in a variety of well-documented settings. In this paper I critique the singular way of knowing embodied in charts and graphs, and apply the theories of epistemological pluralism and extended epistemology to argue for a larger toolbox of data representation. Through three concrete case studies of data representations created by activists I argue that social justice movements can embrace a broader set of approaches, practicing creative data representation as epistemological resistance. Through learning from these ongoing examples the fields of data literacy, open data, and data visualization can help create a broader toolbox for data representation. This is necessary to create a pluralistic practice of bringing people together around data in social justice settings.

December 2023

Stitching Politics and Identity on TikTok

Parker Bach, Adina Gitomer, Melody Devries, Christina Walker, Deen Freelon, Julia Atienza-Barthelemy, Brooke Foucault Welles, Diana Deyoe, and Diana Zulli

Though a relative newcomer among social media platforms, social video-sharing platform TikTok is one of the largest social media platforms in the world, boasting over one billion monthly active users, which it garnered in just five years (Dellatto, 2021). While much of the early attention to the platform focused on more frivolous elements, such as its dances and challenges, the political weight of TikTok has become ever clearer. In the 2020 US election, Donald Trump’s plan to fill the 19,000-seat BOK Center in Tulsa was stymied by young activists who reserved tickets with no intention of attending, organized largely on TikTok (Bandy & Diakopoulos, 2020). In the years since, political discourse on TikTok has continued to emerge from everyday users and political campaigns alike (see Newman, 2022), even as TikTok itself has become an object of political contention: calls for banning the app in the United States–citing security concerns influenced by xenophobia, given the app’s Chinese ownership–began in the Trump presidency (Allyn, 2020) and have recently culminated in state- and federal-level bans on the app for government-owned devices in the U.S. (Berman, 2023). While some studies have navigated limited data access and the platform’s relative infancy to offer an examination of political TikTok (see Literat & Kligler-Vilenchik, 2019; Medina Serrano et al., 2020; Vijay & Gekker, 2021; Guinaudeau et al., 2022), there remains a significant need for more analysis and theorization of how TikTok can become both a site for political discourse and a feature caught up within political mobilization. This panel seeks to bring together emerging work that deals with political participation on TikTok, in order to share current wisdom and forge future research directions. The presented works specifically focus on the relationship between political participation on TikTok and political identity for three primary reasons. 
First, because TikTok is a video-based and thus embodied platform (Raun, 2012), creator identity is more prominent and easily perceptible in the visual and auditory elements of TikTok videos than in the primarily text-based posts on platforms like Twitter and Facebook. Second, TikTok relies more heavily on its recommendation algorithm for content distribution than its competitors traditionally have (Kaye et al., 2022; Cotter et al., 2022; Zeng & Kaye, 2022; Zhang & Liu, 2021), leading to the creation of “refracted publics” (Abidin, 2021) or Gemeinschaft-style communities (Kaye et al., 2022) around users’ common interests, which may include and/or be heavily informed by identity. Third, TikTok has long prioritized and found success with Generation Z and younger users more broadly (Zeng et al., 2021; Vogels et al., 2022; Stahl & Literat, 2022), which has made generational identity extremely salient on the app, while also implicating political identity, as young people tend to hold political beliefs more cognizant and accepting of diverse identities than older generations (Parker et al., 2019). The papers in this panel consider a wide range of identity characteristics of TikTok users and how these identities shape and are shaped by political discourse on TikTok. Paper 1 builds on TikTok’s targeting of Gen Z, considering the identities of age and generation through a content analysis of political remix on TikTok to uncover how younger users use TikTok for political activism as compared to their older counterparts, and finding evidence that TikTok is a powerful site of collective action. Also building from TikTok’s appeal to Gen Z, Paper 2 presents a digital ethnographic analysis of the Trad-Wife phenomenon on TikTok, offering that TikTok quietly (and thus insidiously) offers space for the cultivation of Christian Nationalist, ‘gentle fascisms’ within Gen Z women, often without mention of ‘politics’ at all.
Paper 3 offers a computational content analysis of political posts on TikTok with a focus on the interactions between identity and partisanship, and particularly the ways in which creators of marginalized identities on the right act as identity entrepreneurs, offering conservative critiques of their identity groups in ways which find popularity among conservative audiences of hegemonic identities. Finally, Paper 4 looks at differences in how TikTok users respond to male and female politicians’ TikTok videos using a combination of computational and qualitative methods, with exploratory analysis suggesting that male politicians receive more neutral and positive comments than female politicians. By focusing on identity and political discourse on TikTok, we recognize the wide range of political activity occurring on a platform often denigrated as frivolous, and foreground the importance of identity characteristics to the technological and social shaping of these dialogues.

November 2023

Generative AI and User-Generated Content: Evidence from Online Reviews

Samsun Knight and Yakov Bart

How has generative artificial intelligence affected the quality and quantity of user-generated content? Our analysis of restaurant reviews on Yelp.com and product reviews on Amazon.com shows that, contrary to prior lab evidence, use of generative AI in text generation is associated with significant declines in online content quality. These results are similar both in OLS and in two-period differences-in-differences estimation based on within-reviewer changes in AI use. We also find that use of generative AI is associated with increases in per-reviewer quantity of content, and document heterogeneity in the observed effect between expert and non-expert reviewers, with the strongest declines in quality associated with use by non-experts.

November 2023

Powerful in Pearls and Willie Brown’s Mistress: a Computational Analysis of Gendered News Coverage of Kamala Harris on the Partisan Extremes

Meg Heckman, Rahul Bhargava, and Emily Boardman Ndulue

This study uses a mix of traditional and computational content analysis to track digital news coverage of U.S. Vice President Kamala Harris during and immediately after the 2020 general elections. It’s well documented that female politicians, especially women of color, face biased coverage in the political press. In this paper, we explore how these dynamics play out in the modern hyper-polarized digital media landscape. Our corpus includes roughly 17,000 stories published online between August 2020 and April 2021 by news organizations we categorized along a binary axis of partisanship between Republican and Democrat. Our findings show that sexist, racialized coverage of Harris is most prevalent in news sources shared by mostly Republican voters. Coverage in news sources shared by registered Democratic voters, meanwhile, tended to treat Harris as a celebrity, often fixating on her wardrobe and personal life. We ponder implications for both gender equity in civic life and future feminist media scholarship.

September 2023

Interactive Visualization of Plankton-Mediated Nutrient Cycling in the Narragansett Bay

Avantika Velho, Pedro Cruz, David Banks-Richardson, Gabrielle Armin, Ying Zhang, Keisuke Inomura, and Katia Zolotovsky

Microbial plankton play a critical role in global biogeochemical cycling, and while a vast amount of genomics data has been collected from microbial communities throughout the ocean, there remains a gap in connecting this data to ecosystems and biogeochemical cycles. One key question is how different genotypes influence an organism’s environmental impact. Here, we as scientists and designers have collaborated to develop an interactive visual tool that helps to better understand the relationships between microbial ecosystems and global biogeochemical cycling.

August 2023

Measuring Disparate Outcomes of Content Recommendation Algorithms with Distributional Inequality Metrics

Tomo Lazovich, Luca Belli, Aaron Gonzales, Amanda Bower, Uthaipon Tantipongpipat, Kristian Lum, Ferenc Huszár, and Rumman Chowdhury

The harmful impacts of algorithmic decision systems have recently come into focus, with many examples of machine learning (ML) models amplifying societal biases. In this paper, we propose adapting income inequality metrics from economics to complement existing model-level fairness metrics, which focus on intergroup differences of model performance. In particular, we evaluate their ability to measure disparities between exposures that individuals receive in a production recommendation system, the Twitter algorithmic timeline. We define desirable criteria for metrics to be used in an operational setting by ML practitioners. We characterize engagements with content on Twitter using these metrics and use the results to evaluate the metrics with respect to our criteria. We also show that we can use these metrics to identify content suggestion algorithms that contribute more strongly to skewed outcomes between users. Overall, we conclude that these metrics can be a useful tool for auditing algorithms in production settings.

August 2023

What's in the Black Box? How Algorithmic Knowledge Promotes Corrective and Restrictive Actions to Counter Misinformation in the USA, the UK, South Korea and Mexico

Myojung Chung

While there has been a growing call for insights on algorithms given their impact on what people encounter on social media, it remains unknown how enhanced algorithmic knowledge serves as a countermeasure to problematic information flow. To fill this gap, this study aims to investigate how algorithmic knowledge predicts people’s attitudes and behaviors regarding misinformation through the lens of the third-person effect.

August 2023

What We Owe to Decision-Subjects: Beyond Transparency and Explanation in Automated Decision-making

David Gray Grant, Jeff Behrends, and John Basl

The ongoing explosion of interest in artificial intelligence is fueled in part by recently developed techniques in machine learning. Those techniques allow automated systems to process huge amounts of data, utilizing mathematical methods that depart from traditional statistical approaches, and resulting in impressive advancements in our ability to make predictions and uncover correlations across a host of interesting domains. But as is now widely discussed, the way that those systems arrive at their outputs is often opaque, even to the experts who design and deploy them. Is it morally problematic to make use of opaque automated methods when making high-stakes decisions, like whether to issue a loan to an applicant, or whether to approve a parole request? Many scholars answer in the affirmative. However, there is no widely accepted explanation for why transparent systems are morally preferable to opaque systems. We argue that the use of automated decision-making systems sometimes violates duties of consideration that are owed by decision-makers to decision-subjects, duties that are both epistemic and practical in character. Violations of that kind generate a weighty consideration against the use of opaque decision systems. In the course of defending our approach, we show that it is able to address three major challenges sometimes leveled against attempts to defend the moral import of transparency in automated decision-making.

August 2023

Rawls and Antitrust’s Justice Function

Elettra Bietti

Antitrust law is more contested than ever. The recent push by the Biden Administration to re-orient antitrust towards justice and fairness considerations is leading to public backlash, judicial resistance and piecemeal doctrinal developments. The methodological hegemony of welfare-maximizing moves in antitrust makes it theoretically fragile and maladaptive to change. To bridge disagreements and overcome polarization, this Article revisits John Rawls’ foundational work on political and economic justice, arguing that it can facilitate consensus and inform the present and future of antitrust law.